Digital Occupation: Pro-Russian Bot Networks Target Ukraine's Occupied Territories on Telegram

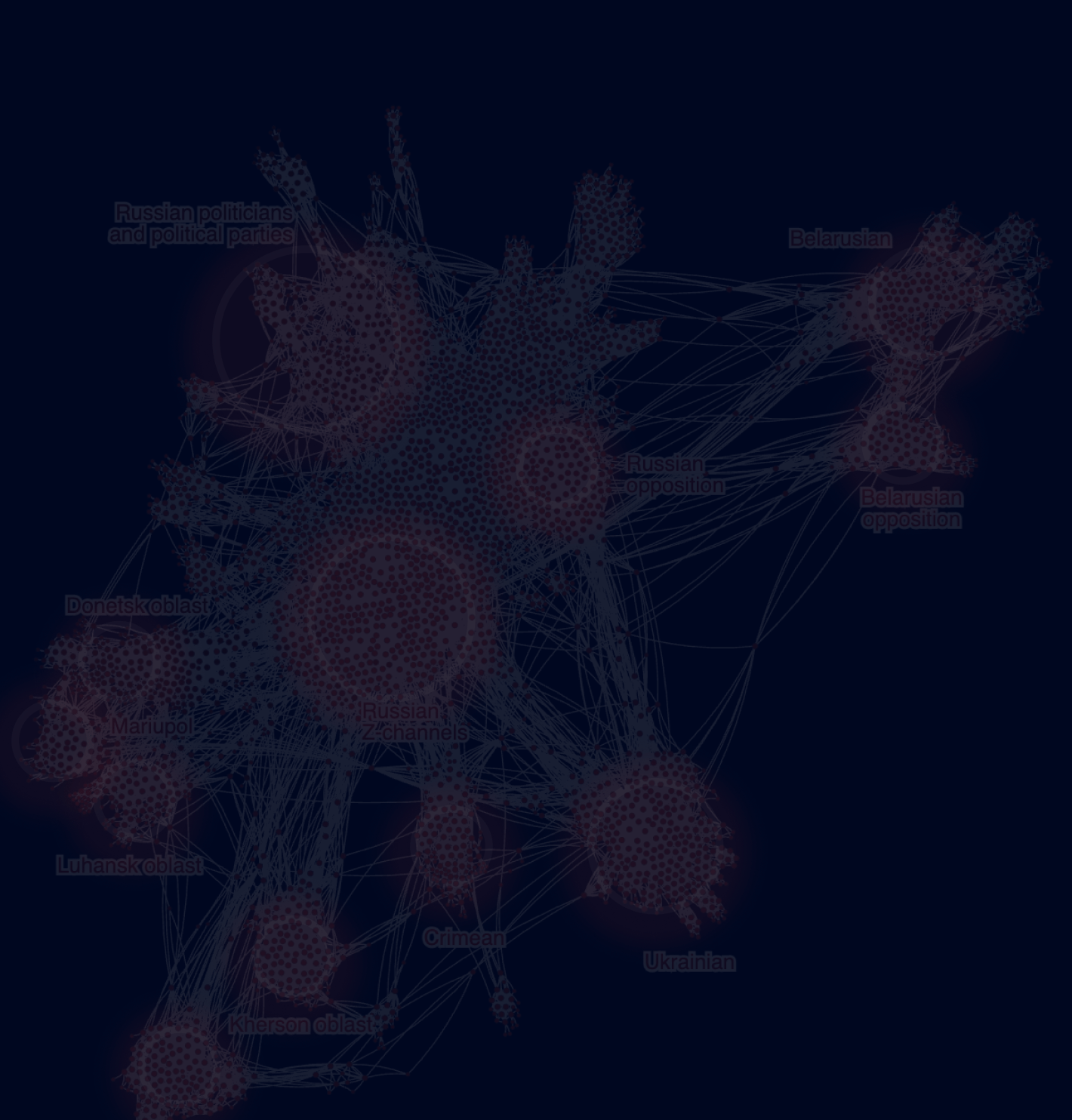

Russia is flooding Telegram with inauthentic comments to shape public opinion in Ukraine’s temporarily occupied territories. We identified a network of more than 3,600 Telegram bots powered by generative AI that targeted local channels with messages praising Russian governance, discrediting Ukraine, and simulating grassroots support for the occupation.

This is a joint investigation by OpenMinds and the Digital Forensic Research Lab (DFRLab). The full report is available on the Atlantic Council’s website.

A Telegram account with the username @nieucqanдoberr offers a striking example of how short-lived yet high-impact bot activity works. The account was active for just one day – May 11, 2024 – during which it posted 1,391 comments across sixty-five Telegram channels and chats. Twenty-nine of those messages were made in channels based in Russian-occupied areas of Ukraine. The bot’s activity spanned from 09:49 to 23:47 Moscow time, averaging one comment every thirty-six seconds.
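The thirty-six-second figure can be checked from the numbers above. The following is a minimal sketch, not the investigators' own tooling: the function name `average_interval_seconds` is hypothetical, and it assumes the average is simply the length of the activity window divided by the number of comments.

```python
from datetime import datetime

def average_interval_seconds(start: str, end: str, n_comments: int) -> float:
    """Average seconds per comment over a same-day HH:MM activity window."""
    fmt = "%H:%M"
    window = datetime.strptime(end, fmt) - datetime.strptime(start, fmt)
    return window.total_seconds() / n_comments

# Figures reported for the account: 1,391 comments between 09:49 and 23:47.
interval = average_interval_seconds("09:49", "23:47", 1391)
print(round(interval))  # 36
```

The window is 13 hours 58 minutes, or 50,280 seconds, which gives roughly 36 seconds per comment.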

Its messaging covered a broad spectrum of themes common to Russian propaganda: praising Putin as the world’s strongest leader, portraying NATO as the manipulator behind Ukraine’s decisions, accusing Zelenskyy of killing his own people, claiming Ukrainians glorify Nazi slogans, and celebrating Russia’s global partnerships, including its growing ties with Africa.

Around 400 of its comments can be classified as pro-Russian propaganda and nearly 1,000 as anti-Ukrainian or anti-Western; a small remainder were neutral.

Unlike simplistic bots that spam identical messages, this account adapted its content to the context of each conversation. It pushed various narratives while responding to previous messages and tailoring its replies to reinforce pro-Kremlin talking points.

For instance, in one thread it wrote, “What a blessing that we have such a wise and reasonable president!” Elsewhere it blamed the West for escalation, claiming that “everything happening in Ukraine is a conflict planned by the West.” Other comments promoted Russia’s global role, stating that “logistical barriers between Russia and Africa are being removed, which will significantly boost trade this year.”

The chart below shows the intensity of this single account's activity over a single day across its most-targeted channels.

A Bot Network of 3,634 Accounts

This one-day blitz was not an isolated case. In our joint investigation with the Digital Forensic Research Lab (DFRLab), we identified 3,634 automated Telegram accounts that posted pro-Russian comments from January 2024 to April 2025. These bots targeted Ukrainian populations inside territories temporarily occupied by Russia (TOT) and were responsible for over 316,000 comments in TOT-related channels alone. More than 3 million others appeared across Ukrainian and Russian Telegram groups and chats.

On average, these bots posted 84 comments per day per account. Some of the most active accounts exceeded 1,000 comments in a single day. The volume alone made these accounts stand out, but they also displayed other signs of inauthenticity: nonsense usernames, mismatched profile pictures, recycled narratives, and overly formal or garbled phrasing suggesting use of generative AI. In fact, every third bot comment was unique, rewritten by AI to simulate human-like language and avoid detection.
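The volume signal described above can be illustrated with a short sketch. It is an assumption-laden illustration, not the method used in the investigation: the function names, the `(account, day)` input shape, the account labels, and the 1,000-comment threshold are all hypothetical, and a real analysis would combine volume with the other signals listed (usernames, profile pictures, phrasing).

```python
from collections import Counter
from datetime import date

def daily_counts(comments):
    """Count comments per (account, day) from an iterable of (account, day) pairs."""
    return Counter((account, day) for account, day in comments)

def flag_high_volume(comments, threshold=1000):
    """Return accounts that posted more than `threshold` comments on any single day."""
    counts = daily_counts(comments)
    return sorted({account for (account, _), n in counts.items() if n > threshold})

# Hypothetical sample: one account with 1,391 comments in a day, one with 3.
sample = [("acct_a", date(2024, 5, 11))] * 1391 + [("acct_b", date(2024, 5, 11))] * 3
print(flag_high_volume(sample))  # ['acct_a']
```

A fixed threshold is the simplest possible choice; it would miss lower-volume bots, so it is best read as a first-pass filter rather than a classifier.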

Occupation Begins with Information Control

The spread of automated comments on Telegram is part of a broader Russian strategy to dominate the information space in the occupied territories. Since the early days of occupation, Russia has forcibly switched local residents to Russian telecom providers, cut off Ukrainian media, and launched dozens of Telegram channels masquerading as local news outlets.

By 2023, the Telegram ecosystem in occupied areas had expanded to more than 600 channels. These channels worked in tandem with newly created ministries of information, regional media holdings, and traditional news outlets operated by Russian appointees. Their role was not only to disseminate propaganda but also to simulate normalcy and create the illusion of public support for occupation.

In this restricted environment, where Telegram often becomes the primary source of news, the manipulation of comment sections takes on added significance. A large volume of seemingly “local” messages expressing gratitude to Russia or disdain for Ukraine helps create what researchers call a “manufactured consensus.”

But what exactly does this consensus consist of?

What the Bots Say: From Infrastructure to Ideology

The bot messages fell into three broad categories:

- Positive propaganda promoting Russia and its government;

- Hostile anti-Ukrainian propaganda, including narratives that portray Ukraine as a failed state, personal attacks on Ukrainian President Volodymyr Zelenskyy, and accusations of terrorism, among others;

- Neutral messages ranging from comments with emojis only, to neutral statements about the horrors of war and the need for peaceful coexistence between nations (without directly pushing pro-Russian narratives).

Pro-Russian propaganda narratives ranged from general praise of the country’s leadership, economy, or military to more localised messages about improvements made by specific politicians or political parties.

This category can be roughly divided into two subsets. The first is general pro-government content found across various Telegram channels, including support for Putin, admiration for the Russian army, discussions of national unity, and mentions of Russia’s strong diplomatic ties with countries such as India and those in Africa.

The second type is more specific to the occupied territories and focuses on local issues, including repairs to infrastructure, access to medicine, reconstruction efforts, the work of local administrations, quality of mobile connectivity, and support for curfews. These narratives aimed to portray life under Russian control as improving and to highlight the state's active assistance to people in the region.

Measured as a share of all comments, pro-Russian messaging (praise for Russia’s leadership, infrastructure, and support for occupied territories) appeared almost twice as often in occupied-territory channels as in the other channels where the bot accounts were active.

From Zelenskyy to NATO: Hostile Messaging Still Present

Alongside these efforts to depict Russia as a benevolent force, the bot network also carried out aggressive anti-Ukrainian and anti-Western campaigns. Around 45,000 comments (one in six) targeted President Zelenskyy personally. Figures such as Antony Blinken, Boris Johnson, Joe Biden, and Donald Trump appeared regularly, often in the context of blaming the West for escalating the war or exploiting it for geopolitical or economic gain.

In contrast, pro-Russian propaganda was far less personalised. With the notable exception of Putin, who was consistently praised in comments for his leadership, stability, and support for the occupied territories, such messaging rarely revolved around specific individuals. Instead, it tended to highlight institutions, infrastructure, or abstract notions like national strength and unity.

Yet even in the absence of named individuals, anti-Ukrainian comments promoted broader hostile narratives – most notably accusations of Nazism and extreme Ukrainian nationalism. This particular narrative, however, gradually declined in frequency over time, suggesting either a shift in messaging priorities or a decrease in its perceived effectiveness.

Campaigns Aligned with Events

Some bot messaging was clearly reactive, aligning with major events. After the launch of a Ukrainian offensive in the Kursk region in the summer of 2024, bots shifted to praising Russian aid efforts and urging residents not to panic. After the Crocus City Hall terrorist attack in Moscow, bots defended Russian security services and blamed Ukraine. During Finland and Sweden’s NATO accession, bots downplayed the threat.

In the occupied territories, similar surges occurred around specific campaigns rather than events. In March 2024, bots flooded channels with existential fears about World War III and accusations that Ukraine had sabotaged peace talks. In May 2024, they launched a coordinated wave of praise for Putin, framing him as the savior of the “new Russian regions.”

One persistent theme was the restoration of basic services – water, electricity, heating – often framed as Russian achievements. Even when the services themselves remained unreliable, the messaging was constant.

By flooding Telegram with tens of thousands of inauthentic comments, Russia aims not just to inform or mislead, but to create the illusion of widespread support for its occupation. In an environment where Ukrainian media is largely inaccessible, this illusion becomes a powerful tool of control.

While bot activity is sometimes short-lived, its effects are not. Every day, locals encounter comment sections that present pro-Kremlin narratives as the majority view. Even when bot accounts are deleted, their influence lingers – shaping local discourse, distorting perceptions, and complicating efforts to reach Ukrainian citizens with accurate information.

The full version of the report, including in-depth findings and methodological details, is available on the Atlantic Council website.
